A few months back, we answered the question of why processed blockchain data can make a massive difference when it comes to analyzing digital assets and web3 activity. Feel free to check it out:

https://dexterlab.com/why-would-you-pay-for-blockchain-data/

Today, let’s dive a little deeper. Why did we start the DEXterLab journey by building a web3 data warehouse? It takes a lot of time, effort, and money. Plus, data lake APIs are already available; we could just plug them in and move on to the next big milestone. Most web3 data tools use APIs, so why can’t we?

In fact, when we started working on DEXterLab, that was the plan. But we quickly ran into some problems.

Cost

First, the pricing plans of those API tools don’t suit our ambitious vision. Even enterprise plans that include millions of API calls per month would limit our unified data set capabilities so much that there would be no point in even starting to build them.

What is the unified data set?

I’m glad you asked.

In short, a unified data set is what you get when fragmented data sources are merged into one. Just think how many data events there are on a blockchain: DEX swaps, DeFi, NFT mints, sales, staking, P2E activity, bots, transfers between wallets, bridging from one L1 to another.

That’s millions of actions by millions of users, and we think every single action on a blockchain matters. Building data sets out of the activities and relationships between them is impossible with currently available web3 data lakes.
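To make the idea more concrete, here is a minimal Python sketch of unifying two fragmented sources into one timeline. The `UnifiedEvent` schema, its field names, and the raw-record keys used by the adapters are hypothetical, not DEXterLab's actual schema. The sketch also assumes all events come from a single chain (so block numbers are directly comparable) and that each source stream is already sorted.

```python
from dataclasses import dataclass
from heapq import merge
from typing import Iterable, Iterator


@dataclass(frozen=True)
class UnifiedEvent:
    # Hypothetical common schema shared by every event type.
    chain: str
    block: int
    log_index: int  # position of the event within its block
    wallet: str
    kind: str       # e.g. "swap", "nft_sale"
    asset: str
    amount: float


def from_swap(raw: dict) -> UnifiedEvent:
    # Adapter for a hypothetical DEX swap record.
    return UnifiedEvent(raw["chain"], raw["block"], raw["idx"],
                        raw["trader"], "swap", raw["token_in"], raw["amount_in"])


def from_nft_sale(raw: dict) -> UnifiedEvent:
    # Adapter for a hypothetical NFT marketplace sale record.
    return UnifiedEvent(raw["chain"], raw["block"], raw["idx"],
                        raw["buyer"], "nft_sale", raw["collection"], raw["price"])


def unify(*streams: Iterable[UnifiedEvent]) -> Iterator[UnifiedEvent]:
    # Each stream must already be sorted by (block, log_index);
    # heapq.merge lazily interleaves them into one ordered timeline.
    return merge(*streams, key=lambda e: (e.block, e.log_index))


swaps = [from_swap({"chain": "ethereum", "block": 100, "idx": 3, "trader": "0xA",
                    "token_in": "WETH", "amount_in": 1.5})]
sales = [
    from_nft_sale({"chain": "ethereum", "block": 99, "idx": 0, "buyer": "0xB",
                   "collection": "ExampleNFT", "price": 0.8}),
    from_nft_sale({"chain": "ethereum", "block": 100, "idx": 5, "buyer": "0xA",
                   "collection": "ExampleNFT", "price": 0.9}),
]
timeline = list(unify(swaps, sales))
print([(e.block, e.kind) for e in timeline])
# [(99, 'nft_sale'), (100, 'swap'), (100, 'nft_sale')]
```

Once every source speaks the same schema, a question that spans event types (say, which wallets buy an NFT right after a swap) becomes a filter over one ordered stream rather than a join across several external APIs.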

Speed

Then, there’s speed. If you have used third-party analytics tools, you might have noticed that the data lags. Even something as straightforward as an NFT floor price can take a while to update on some platforms, sometimes an unacceptably long while.

Delay is not an option. The data set must be powered by real-time activity!
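One common way to avoid that kind of lag is to update metrics incrementally as events arrive instead of recomputing them in periodic batches. The sketch below uses a hypothetical `FloorTracker` class (an illustration, not DEXterLab's code) that keeps a collection's floor price current by pushing listings onto a min-heap and lazily discarding entries for listings that were sold, cancelled, or repriced.

```python
import heapq
from typing import Dict, List, Optional, Tuple


class FloorTracker:
    """Hypothetical tracker for one collection's lowest active listing price."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, str]] = []  # (price, token_id); may hold stale entries
        self._active: Dict[str, float] = {}       # token_id -> current listing price

    def add_listing(self, token_id: str, price: float) -> None:
        # A new listing, or a relisting at a different price.
        self._active[token_id] = price
        heapq.heappush(self._heap, (price, token_id))

    def remove_listing(self, token_id: str) -> None:
        # Called on a sale or cancellation; the heap entry is cleaned up lazily.
        self._active.pop(token_id, None)

    def floor(self) -> Optional[float]:
        # Drop heap entries that no longer match an active listing.
        while self._heap:
            price, token_id = self._heap[0]
            if self._active.get(token_id) == price:
                return price
            heapq.heappop(self._heap)
        return None


tracker = FloorTracker()
tracker.add_listing("1", 2.0)
tracker.add_listing("2", 1.5)
tracker.add_listing("3", 1.8)
print(tracker.floor())         # 1.5
tracker.remove_listing("2")    # token 2 sells
print(tracker.floor())         # 1.8
tracker.add_listing("3", 2.5)  # token 3 is relisted at a higher price
print(tracker.floor())         # 2.0
```

Each listing event costs O(log n), and every stale heap entry is popped at most once, so the floor can be refreshed on every new block rather than on a schedule.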

History

Another limitation of current APIs is that they cannot provide historical blockchain activity. For example, you can’t retrieve a wallet’s state at an arbitrary point in history: the complete list of its assets, including staked ones, along with their value.
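Conceptually, reconstructing a wallet's state at a past block comes down to replaying every balance change up to that block. Here is a minimal sketch assuming a hypothetical `BalanceDelta` event that already carries a signed integer amount (a real system would use the asset's smallest unit, such as wei, to keep sums exact) and a flag for staked positions. Valuing the holdings would additionally require a join against historical prices, which is left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class BalanceDelta:
    # Hypothetical normalized event: a signed change to one wallet's position.
    block: int
    wallet: str
    asset: str
    delta: int            # integer amount so that sums stay exact
    staked: bool = False


def wallet_state_at(events: Iterable[BalanceDelta], wallet: str,
                    block: int) -> Dict[Tuple[str, bool], int]:
    """Replay all deltas for `wallet` up to and including `block`."""
    positions: Dict[Tuple[str, bool], int] = defaultdict(int)
    for e in events:
        if e.wallet == wallet and e.block <= block:
            positions[(e.asset, e.staked)] += e.delta
    # Omit positions that were fully exited.
    return {key: amount for key, amount in positions.items() if amount != 0}


events = [
    BalanceDelta(10, "0xA", "ETH", 40),
    BalanceDelta(15, "0xB", "ETH", 5),
    BalanceDelta(20, "0xA", "ETH", -32),              # 32 ETH leave the liquid balance...
    BalanceDelta(20, "0xA", "ETH", 32, staked=True),  # ...and become a staked position
    BalanceDelta(30, "0xA", "USDC", 1000),
]
print(wallet_state_at(events, "0xA", 15))  # {('ETH', False): 40}
print(wallet_state_at(events, "0xA", 25))  # {('ETH', False): 8, ('ETH', True): 32}
```

A full scan like this slows down as history grows; a common refinement is to keep periodic snapshots and replay only the deltas recorded after the nearest snapshot.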

Our warehouse already holds historical Ethereum activity, including data from its L2s. Solana is on the way, and other major L1s will follow.

Now that we’ve clarified the WHY, let’s look at the HOW.

Architecture

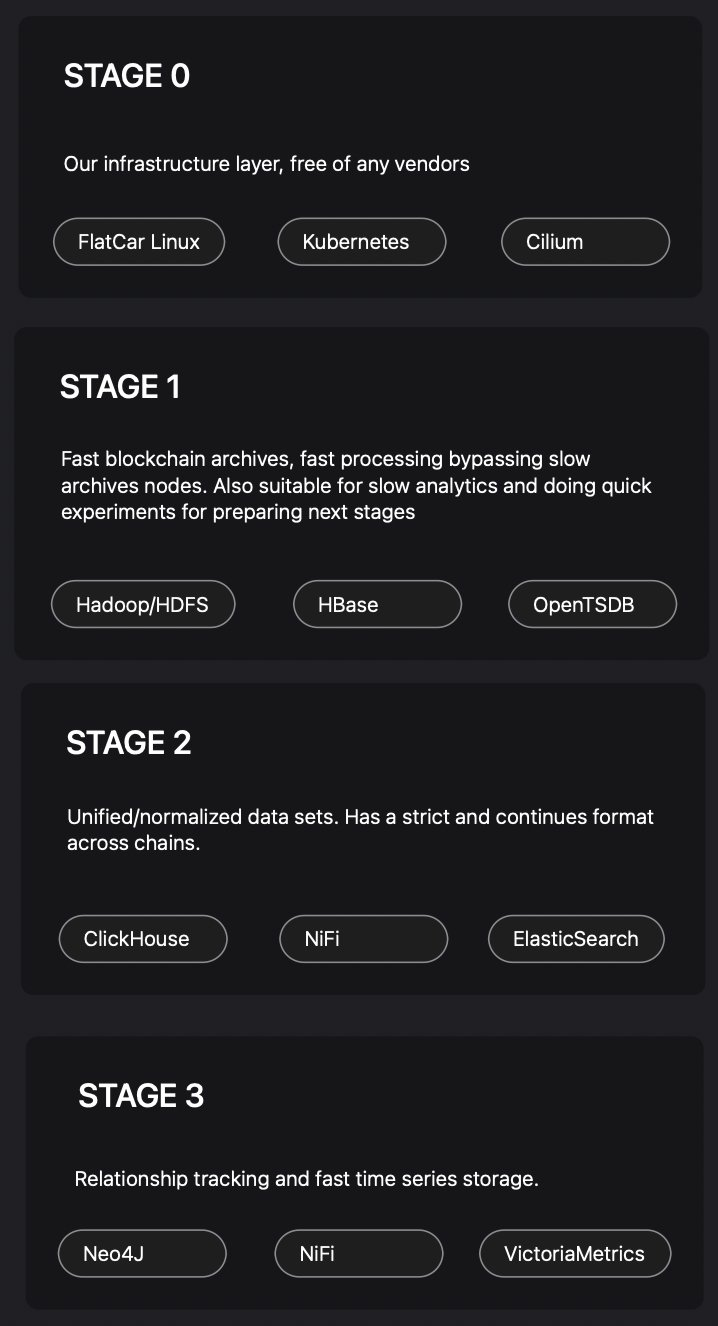

When choosing services, we always keep a few principles in mind:

- Vendor-free: no AWS, GCE, etc.

- Open source only

- Easy to replicate in other data centers (DCs)

We deploy everything on bare-metal servers using a minimal setup of vanilla Kubernetes, Cilium, OpenEBS, and a whole lot of Kubernetes Operators, all running on Flatcar Container Linux.

Currently, our playbooks and automation work with OVH and Hetzner data centers.

For ETL, we use Apache NiFi, for which we have already written a bunch of custom processors. Where we need more speed, we use NoFlo. The message broker here is, of course, Kafka.

For storing raw blockchain history, we use Hadoop (HDFS) with HBase.

Sorted and polished data sets are stored in ClickHouse, some of them in Elasticsearch (when we need fast Lucene indexes), and relationship data in Neo4j.
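A graph store like Neo4j shines at questions such as "which wallets are within a few transfers of this one?", which Cypher expresses as a variable-length path match. As a stand-in, here is a minimal plain-Python sketch of the same idea: transfers become undirected edges and a breadth-first search returns every wallet within `max_hops`, along with its distance. The function names and the undirected "has interacted with" modeling are assumptions for illustration only.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Set, Tuple


def build_graph(transfers: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    # Treat each transfer as an undirected "has interacted with" edge.
    graph: Dict[str, Set[str]] = defaultdict(set)
    for sender, receiver in transfers:
        graph[sender].add(receiver)
        graph[receiver].add(sender)
    return graph


def within_hops(graph: Dict[str, Set[str]], start: str, max_hops: int) -> Dict[str, int]:
    """Breadth-first search returning {wallet: hop distance} up to max_hops."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    del distance[start]
    return distance


graph = build_graph([("0xA", "0xB"), ("0xB", "0xC"), ("0xC", "0xD"), ("0xE", "0xF")])
print(within_hops(graph, "0xA", 2))  # {'0xB': 1, '0xC': 2}
```

A dedicated graph database keeps such traversals fast at scale through index-free adjacency; the in-memory dictionary here only shows the shape of the query.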

And the last category in our setup is time series databases (TSDB). Our favorite one is VictoriaMetrics.

Since we already run a large HDFS setup with HBase, we added OpenTSDB on top of it as well. It serves mostly as an archive in case of disaster.
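For illustration, a single time series point is just a metric name, a set of labels, a value, and a timestamp. The sketch below renders one sample in the Prometheus text exposition format, which is one of the formats VictoriaMetrics can ingest (for example through its `/api/v1/import/prometheus` endpoint). The metric and label names are hypothetical, and the HTTP request itself is left out.

```python
from typing import Dict


def escape_label_value(value: str) -> str:
    # The text format requires escaping backslashes, double quotes, and newlines.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def to_prometheus_line(metric: str, labels: Dict[str, str],
                       value: float, timestamp_ms: int) -> str:
    """Render one sample as `name{labels} value timestamp_ms`."""
    label_str = ",".join(
        f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
    )
    return f"{metric}{{{label_str}}} {value} {timestamp_ms}"


line = to_prometheus_line(
    "nft_floor_price_eth",  # hypothetical metric name
    {"collection": "ExampleNFT", "chain": "ethereum"},
    1.5,
    1700000000000,
)
print(line)
# nft_floor_price_eth{chain="ethereum",collection="ExampleNFT"} 1.5 1700000000000
```

Sorting the labels is not required by the format, but it makes the output deterministic, which simplifies testing and deduplication.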

What’s in it for you?

For developers, the answer is pretty straightforward: we solve the problems that limit other builders, problems we know well.

Besides core data events like value, swap direction, floor price, and the like, we also offer enriched data sets. We can tailor them to specific needs and projects, or provide our own branded data sets built on more complex activities and the relationships between them.

All of it in a single API covering multiple blockchains!

The internal data sets we’re working on can serve specific purposes such as flagging suspicious activity, providing actionable insights, wallet profiling, and much more. Ultimately, our goal is to make the whole crypto world safer, more fun, and fairer for retail participants, who often end up being exit liquidity for institutions and whales.

Last but not least is THE COMMUNITY! NFT holders are our Familia, and they will reap the rewards of the value we create.

Become part of the DEXterLab Familia >> discord.gg/rJfMWMSbgh